American scientists have achieved a major nuclear energy milestone but questions remain about how the technology can be applied

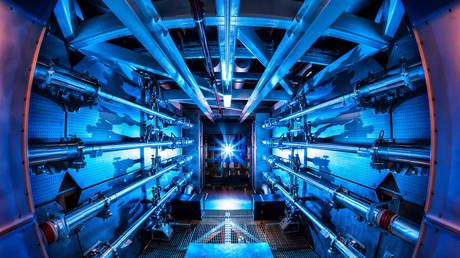

The United States Department of Energy announced last week that on December 5 scientists at the National Ignition Facility (NIF), a complex at the Lawrence Livermore National Laboratory in California, achieved a key advance in nuclear fusion.

For over a decade, researchers at this facility have been trying to produce a fusion reaction that generates more energy than it consumes – and they now say they’ve reached that milestone, which has eluded nuclear scientists for almost 60 years.

Achieving effective fusion reactions has been the dream of nuclear scientists for decades. The process combines light elements, such as isotopes of hydrogen, into heavier ones like helium, releasing vast amounts of clean energy. It's the same process that powers the sun – and it's vastly superior to current nuclear technology based on fission, i.e., splitting atoms rather than fusing them, in part because it produces far less long-lived nuclear waste. That waste has been a major roadblock to the further adoption of nuclear fission reactors, along with several high-profile nuclear accidents such as the infamous Chernobyl disaster.

The energy department’s PR team has been touting this development, and rightfully so, because it is incredibly groundbreaking for the future of energy technology. Still, there are some lingering questions about what it actually means.

As for what's being claimed, the DoE says that fusion energy could play a key role in combating climate change by producing clean, safe electricity without the attendant greenhouse gas emissions. But its widespread use in the US energy grid is likely decades away. Realistically, without another major breakthrough, fusion won't be a major source of energy until the 2060s or 2070s, according to Tony Roulstone, a nuclear engineer at Cambridge University, who spoke to National Public Radio (NPR).

On that timeline, fusion won't be a major part of the US energy portfolio until well after 2050, the year by which the administration of President Joe Biden says the US will achieve net-zero greenhouse gas emissions. Experts widely agree that this goal is crucial to avoiding the worst consequences of climate change, and while fusion could greatly improve the odds of meeting it, it isn't a feasible tool for doing so at this point.

Along with the practical problems of rolling out the technology, there's also the question of who will actually do it. Since this breakthrough happened at a government-run research facility funded by taxpayers through the DoE, we can assume that any resulting patents are held by the federal government. However, the US government in particular often lets private companies use such patents and waives its legal right to set price caps. Part of this is rooted in the fact that the government doesn't have the capacity to build and roll out the technology itself. It could, though, use the Defense Production Act (DPA) to temporarily direct domestic industrial production, especially if the president declared climate change a national emergency.

This was a major point of contention with Covid-19 vaccines during the pandemic. The federal government resisted calls to let other manufacturers produce mRNA vaccines developed with taxpayer-funded research, even though doing so would have sped up vaccine rollout in many countries around the world, particularly those in the Global South. (Both the Trump and Biden administrations did, however, invoke the DPA to stockpile medical supplies during the pandemic.)

For example, BioNTech, the company that actually developed the Pfizer vaccine, received a $455 million grant from the German government and around $6 billion in purchase commitments from the US and EU. The Pfizer vaccine also relies on mRNA technology patented by the National Institutes of Health, the research behind it having been funded by US taxpayers. As federal Covid-19 funding dries up, US residents now have to pay for vaccines that their tax dollars helped create in the first place, leaving many without access.

In fact, if you look at pretty much every major consumer technology in US history, you will find significant involvement of public funds or government research and development (R&D). This is especially true in medicine, telecommunications, aviation and other high-tech fields. Yet many people can't readily access this technology because poor and working-class folks are often priced out. The intellectual property rights to publicly funded technology are not handed over to the public domain (as they ought to be) but are routinely given to private companies.

Given the profits that fusion technology could generate, it seems almost inevitable that a private company will use the patent to commercialize it rather than making it a publicly available good. That would artificially hamper its widespread adoption across the US energy grid (not to mention the global energy grid), making this breakthrough essentially useless for the vast majority of the world's population. For fusion to have a noticeable impact in the fight against climate change, it actually has to be used.

But if a major nuclear company like Westinghouse somehow stakes a claim on this technology, access to it will be extremely limited. Moreover, given the way the US government partners with private companies in international public tenders, fusion could become another geopolitical tool in Washington's high-tech trade wars, like the ongoing one against China. In other words, the technology could be, and most likely will be, kept out of the public's hands unless the federal government reverses decades of precedent by actually exercising its legal right to set prices that favor accessibility over private profit.